Overview

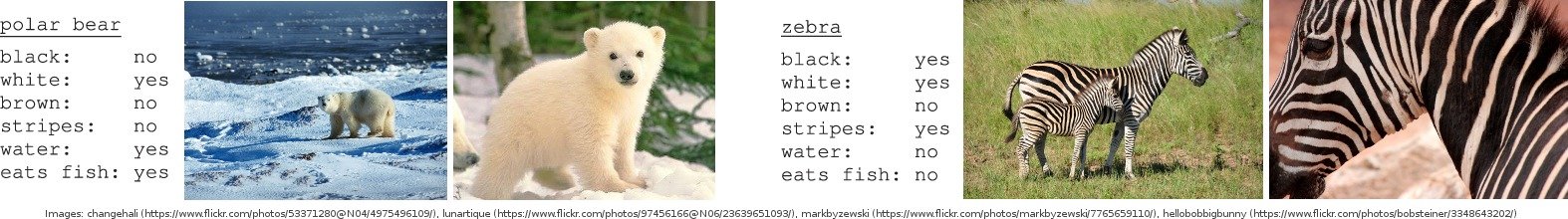

This dataset provides a platform to benchmark transfer-learning algorithms, in particular attribute-based classification and zero-shot learning [1]. It can act as a drop-in replacement for the original Animals with Attributes (AwA) dataset [2,3], as it has the same class structure and almost the same characteristics.

It consists of 37,322 images of 50 animal classes, with pre-extracted feature representations for each image.

The classes are aligned with Osherson's classical class/attribute matrix [3,4], thereby providing 85 numeric attribute values for each class. Using the shared attributes, it is possible to transfer information between different classes.
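To illustrate how shared attributes allow transfer to classes without training images, here is a minimal sketch in the spirit of attribute-based classification [1]. It is a simplified variant, not the dataset's reference method: it assumes some hypothetical per-attribute classifiers already output a probability that each attribute is present in an image, assumes each class has a binary attribute signature (for example, obtained by thresholding the numeric values), and omits the attribute-prior normalization of the full method. The function name `dap_predict` and the three-attribute toy signatures are illustrative only, not taken from the dataset files.

```python
import math
from typing import Dict, List, Optional


def dap_predict(attr_probs: List[float],
                class_attributes: Dict[str, List[int]],
                eps: float = 1e-9) -> Optional[str]:
    """Pick the class whose binary attribute signature best explains attr_probs.

    attr_probs[m] is an (assumed) classifier estimate of P(attribute m present | image).
    class_attributes maps each candidate class name to its binary attribute vector.
    Classes never seen during training can be scored, because only their
    attribute signatures are needed.
    """
    best_class, best_score = None, -math.inf
    for name, signature in class_attributes.items():
        if len(signature) != len(attr_probs):
            raise ValueError(f"attribute length mismatch for class {name!r}")
        # Sum of log-probabilities that each attribute takes the value the class expects.
        score = 0.0
        for p, a in zip(attr_probs, signature):
            q = p if a == 1 else 1.0 - p
            score += math.log(max(q, eps))  # eps guards against log(0)
        if score > best_score:
            best_class, best_score = name, score
    return best_class


# Hypothetical toy signatures over three attributes ("stripes", "hooves", "water").
classes = {
    "zebra":   [1, 1, 0],
    "dolphin": [0, 0, 1],
    "horse":   [0, 1, 0],
}
print(dap_predict([0.9, 0.8, 0.1], classes))  # prints "zebra"
```

With the real data, the signatures would have 85 entries per class, and the attribute probabilities would come from classifiers trained on the pre-extracted image features of the training classes only.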

The image data was collected in 2016 from public sources, such as Flickr. In the process, we made sure to include only images that are licensed for free use and redistribution; please see the archive for the individual license files.

If the dataset contains an image for which you hold the copyright and that was not licensed freely, please contact us at